How to Efficiently Fine-Tune CodeLlama-70B-Instruct with Predibase

Learn how to fine-tune CodeLlama-70B reliably and efficiently in a few lines of code with Predibase, the developer platform for fine-tuning and serving open-source LLMs. This short tutorial provides code snippets to get you started.

Piero Molino on LinkedIn: Efficient Fine-Tuning for Llama-7b on a Single GPU

Learn how to fine-tune code with CodeLlama-70B 🤔, Predibase posted on the topic

Jeevanandham Venugopal posted on LinkedIn

Fine-Tuning CodeLlama for Advanced Text-to-SQL Queries with PEFT and Accelerate, by vignesh yaadav, Feb, 2024

Predibase on LinkedIn: Privately hosted LLMs, customized for your task

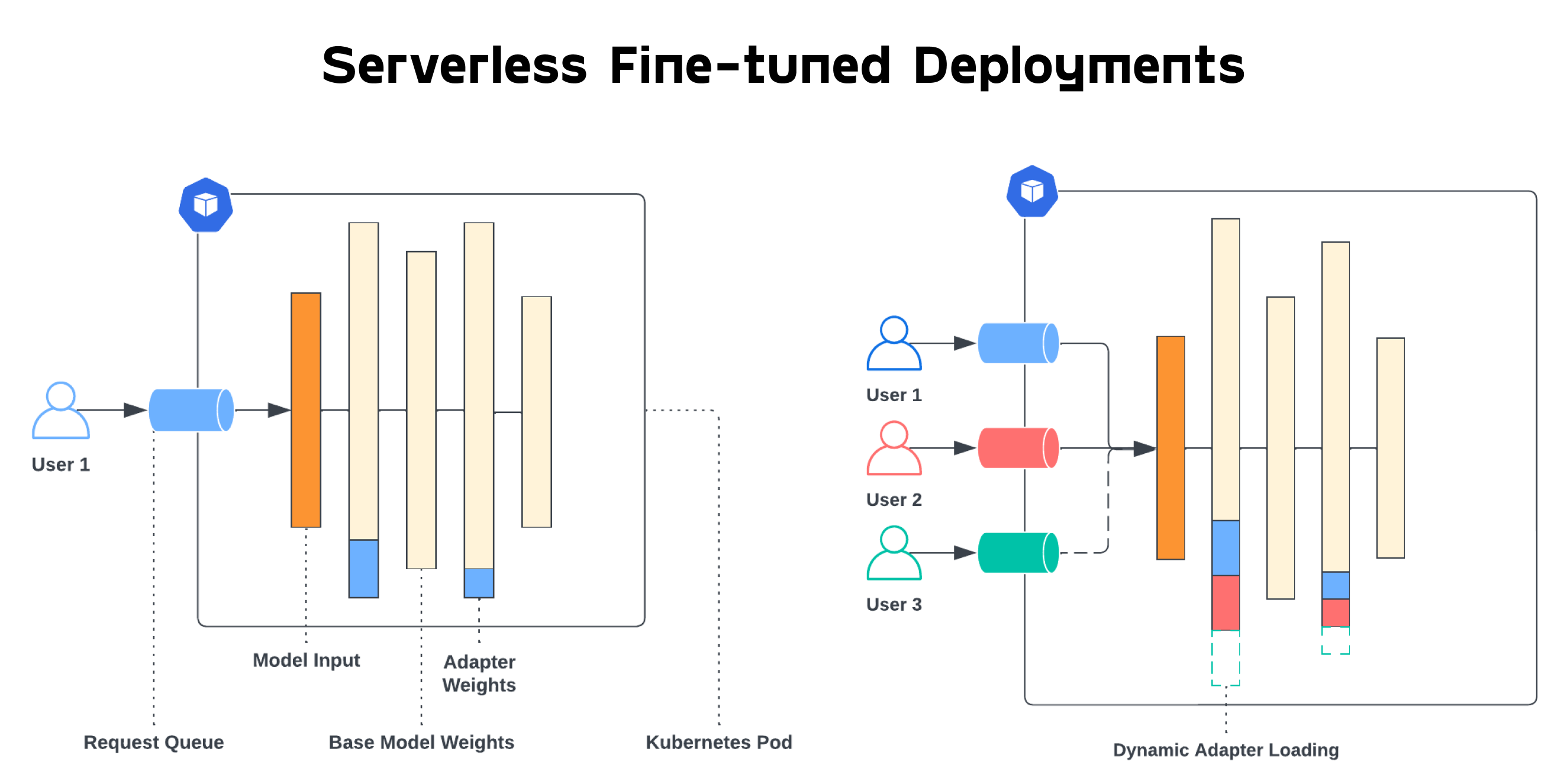

Introducing the first purely serverless solution for fine-tuned LLMs - Predibase - Predibase

Predibase on LinkedIn: Build Your Own LLM in Less Than 10 Lines of YAML

Lysandre (@LysandreJik) / X

Predibase on LinkedIn: LLM Use Case: Text Classification

CodeLlama 70B

Predibase on LinkedIn: Predibase: The Developers Platform for Productionizing Open-source AI

Efficient Fine-Tuning for Llama-v2-7b on a Single GPU

Blog - Predibase

Fine-tune Mixtral 8x7B with best practice optimization 🚀, Predibase posted on the topic