Complete Guide On Fine-Tuning LLMs using RLHF

Fine-tuning LLMs can help you build custom, task-specific, expert models. Read this blog to learn the methods, steps, and process for fine-tuning with RLHF.

In discussions about why ChatGPT has captured our fascination, two common themes emerge:

1. Scale: Increasing data and computational resources.

2. User Experience (UX): Transitioning from prompt-based interactions to more natural chat interfaces.

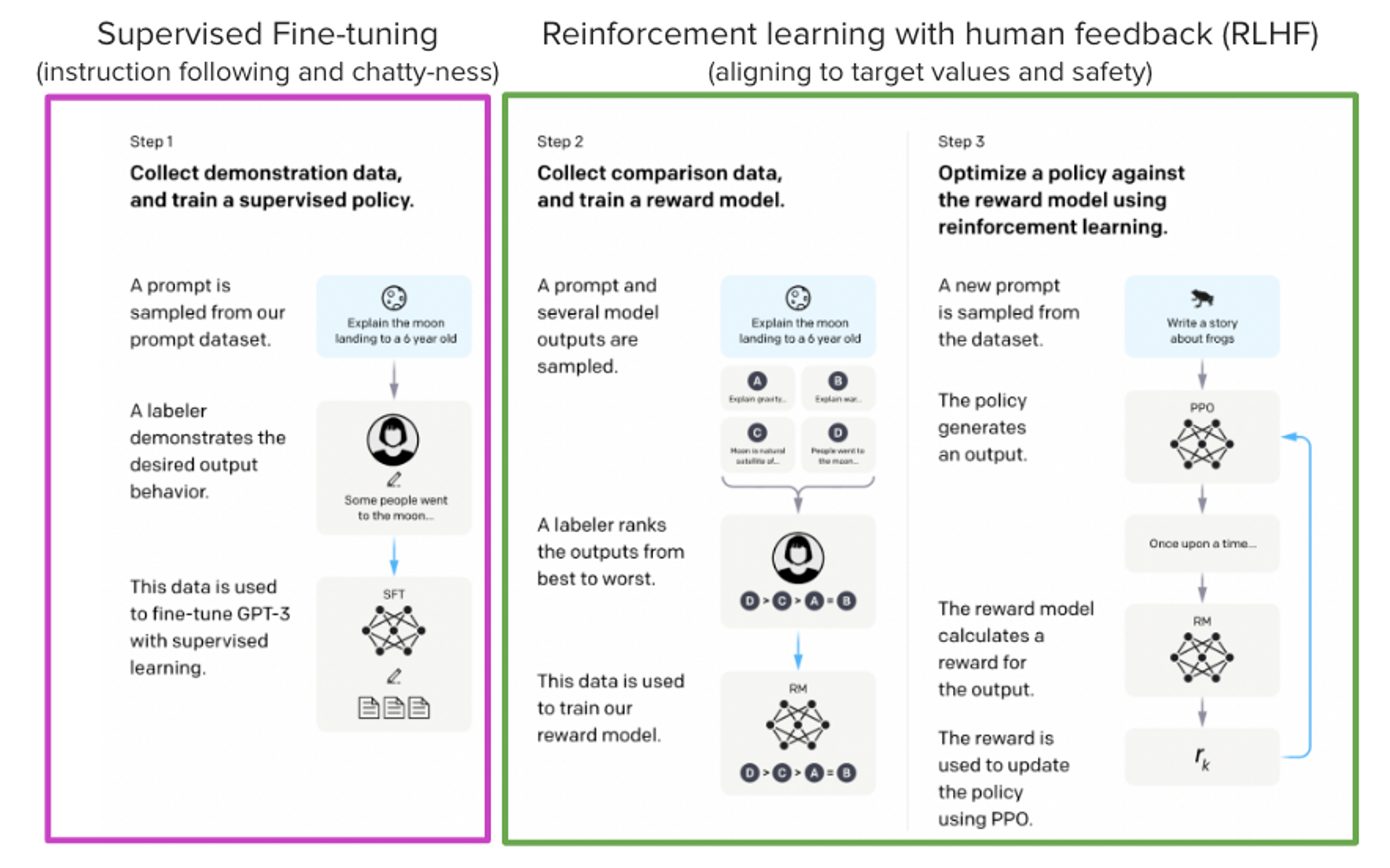

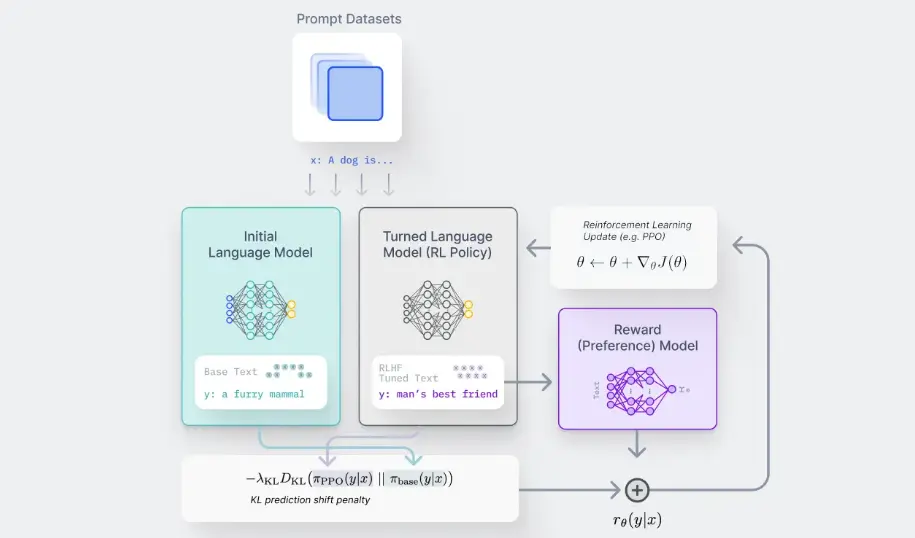

However, one aspect is often overlooked: the remarkable technical innovation behind the success of models like ChatGPT. One particularly ingenious concept is Reinforcement Learning from Human Feedback (RLHF), which combines reinforcement learni

To fine-tune or not to fine-tune., by Michiel De Koninck

StackLLaMA: A hands-on guide to train LLaMA with RLHF

Reinforcement Learning with Human Feedback in LLMs: A Comprehensive Guide, by Rishi

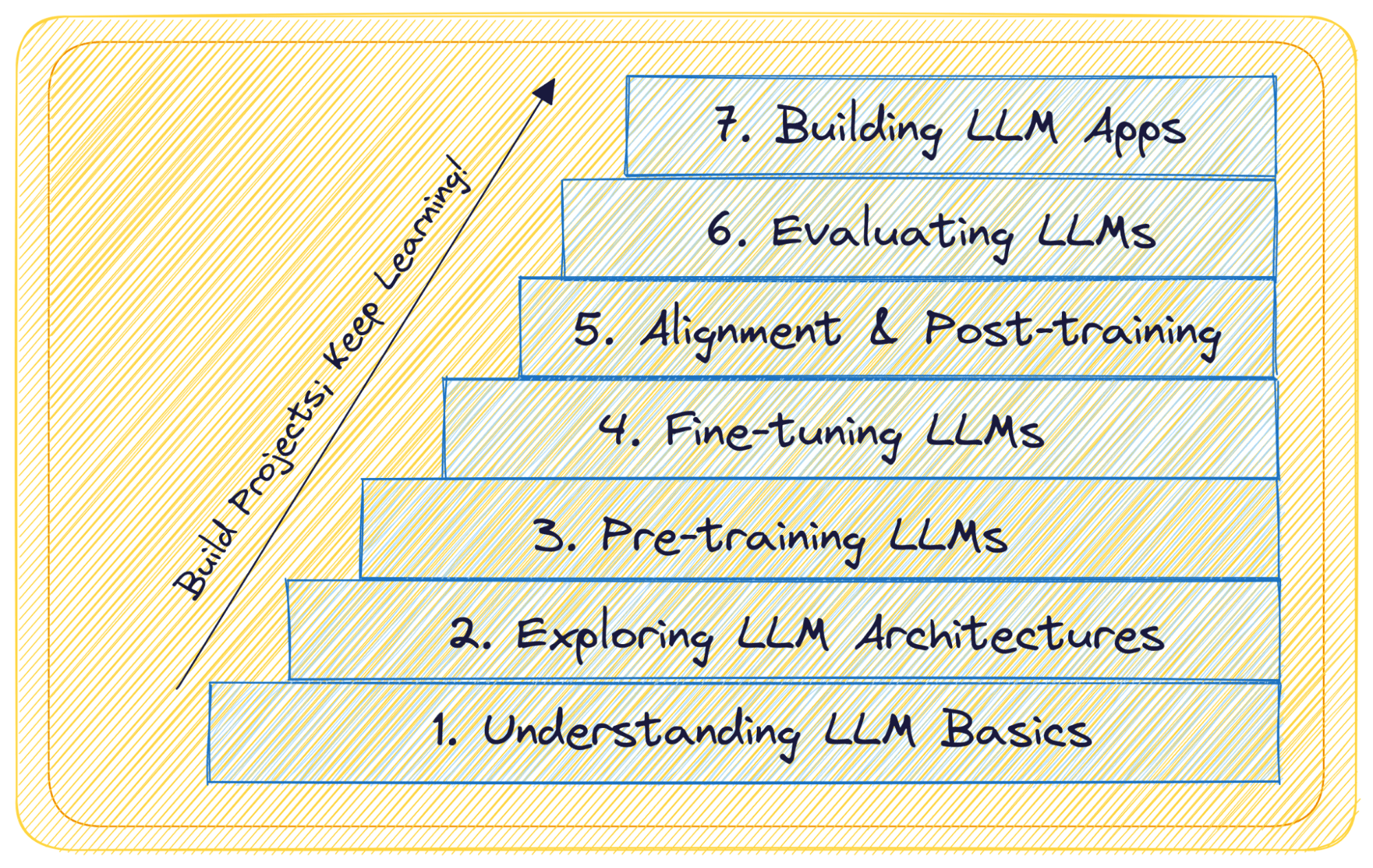

7 Steps to Mastering Large Language Models (LLMs) - KDnuggets

Reinforcement Learning from Human Feedback (RLHF), by kanika adik

Building and Curating Datasets for RLHF and LLM Fine-tuning // Daniel Vila Suero // LLMs in Prod Con

Cameron R. Wolfe, Ph.D. on X: Reinforcement Learning from Human Feedback (RLHF) is a valuable fine-tuning technique, but people often misunderstand how it works and the impact that it has on LLM

A Beginner's Guide to Fine-Tuning Large Language Models

Guide to Fine-Tuning Techniques for LLMs

Exploring Reinforcement Learning with Human Feedback